AI with Malayalam Computing!

DebUtsav 2023, Govt. Model Engineering College, Thrikkakara.

Saturday, June 17, 2023

$whoami

- AI Engineer & Team Lead @ Sentient.io

- Volunteer @ Swathanthra Malayalam Computing (SMC)

- xMECian

- Open-source enthusiast

- Not affiliated with OpenAI

Disclaimer

- Nothing in this talk is generated, unless it is explicitly marked as output from an LLM.

About Malayalam

- Malayalam is my mother tongue.

- Native speakers: 38+ million.

- Spoken in: Kerala, Lakshadweep, Puducherry, and wherever Mallus live.

Malayalam is a morphologically complex language

- It has complex morphology compared to other languages such as English, Tamil, Hindi, Spanish, Finnish, etc.

- Morphological complexity can be measured with metrics such as TTR and MATTR [1], [2].
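Both metrics can be computed in a few lines. The sketch below is a minimal illustration only, assuming lowercased whitespace tokenization (not a real Malayalam tokenizer) and an arbitrary window size of 5; studies on real corpora typically use much larger windows. MATTR slides a fixed-size window over the text and averages the TTR of each window, so unlike plain TTR it does not shrink just because the text gets longer.

```python
def ttr(tokens):
    """Type-token ratio: number of distinct tokens (types) / number of tokens."""
    return len(set(tokens)) / len(tokens)


def mattr(tokens, window=5):
    """Moving-average TTR: mean TTR over every run of `window` consecutive tokens."""
    if len(tokens) <= window:
        # Text is no longer than one window, so fall back to plain TTR.
        return ttr(tokens)
    scores = [ttr(tokens[i:i + window]) for i in range(len(tokens) - window + 1)]
    return sum(scores) / len(scores)


# Lowercase + whitespace split is a simplification, assumed for illustration.
tokens = "To be or not to be is a question".lower().split()
print(round(ttr(tokens), 3))    # 0.778
print(round(mattr(tokens), 3))  # 0.92
```

On this short sentence every 5-token window is nearly all distinct words, which is why MATTR comes out higher than the whole-text TTR.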

Types and Tokens

- To be or not to be is a question

- Type count: 7

- Token count: 9
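Counting types and tokens for the sentence above, assuming lowercasing (so "To" and "to" are the same type) and plain whitespace tokenization:

```python
from collections import Counter

sentence = "To be or not to be is a question"
# Lowercase so "To" and "to" count as one type, then split on whitespace.
tokens = sentence.lower().split()
type_counts = Counter(tokens)

print("Tokens:", len(tokens))      # Tokens: 9
print("Types:", len(type_counts))  # Types: 7
print(type_counts.most_common(2))  # [('to', 2), ('be', 2)]
```

The two repeated words ("to" and "be") are exactly what makes the type count two less than the token count.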

Type Token Ratio (TTR) and TTGR

- TTR: Type-token ratio, calculated as TTR = type count / token count.

- TTGR: Type-token growth ratio. This curve is obtained by plotting the type count against the token count as more text is read.
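A minimal sketch of the TTGR curve: walk through the tokens in order and record the type count after each token. The `ttgr_curve` helper is hypothetical and written only for illustration. For a morphologically rich language like Malayalam, inflected word forms keep showing up as new types, so one would expect this curve to keep rising more steeply than it does for English.

```python
def ttgr_curve(tokens):
    """Return (token_count, type_count) pairs as the text grows one token at a time."""
    seen = set()
    points = []
    for n, tok in enumerate(tokens, start=1):
        seen.add(tok)
        points.append((n, len(seen)))
    return points


tokens = "to be or not to be is a question".split()
curve = ttgr_curve(tokens)
print(curve)
tokens_seen, types_seen = curve[-1]
print(types_seen / tokens_seen)  # TTR of the whole text, 7 / 9
```

The curve flattens at tokens 5 and 6 because "to" and "be" repeat; in a plot, those flat stretches are what a lower TTR looks like.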

Example

- To be or not to be is a question: TTR = 7 / 9 ≈ 0.78

TTGR and TTR plot of Malayalam for SMC Corpus of Wikipedia text from K. Manohar et al.

OpenAI Whisper

- I think Whisper is the most under-rated model released by OpenAI.

- It was open-sourced on September 21, 2022, with the release of the inference code and pre-trained model weights.

About OpenAI Whisper Model

- Whisper is a computer program which can listen to people talking and write down what they say. (Automatic Speech Recognition Model)

- Whisper can understand people speaking different languages and can even translate what they say into English. (Supports transcription and translation to English)

Whisper Models

| Size | Parameters | Required VRAM | Relative speed |
|---|---|---|---|
| tiny | 39 M | ~1 GB | ~32x |
| base | 74 M | ~1 GB | ~16x |
| small | 244 M | ~2 GB | ~6x |
| medium | 769 M | ~5 GB | ~2x |
| large | 1550 M | ~10 GB | 1x |
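A small, hypothetical helper that uses the approximate figures from the table above to pick the largest Whisper model that fits in a given amount of GPU memory. The VRAM numbers are rough, so treat the result as a starting point rather than a guarantee.

```python
# (size, parameters in millions, approx. VRAM in GB, relative speed vs. large)
# Values copied from the Whisper models table; the VRAM figures are approximate.
WHISPER_MODELS = [
    ("tiny", 39, 1, 32),
    ("base", 74, 1, 16),
    ("small", 244, 2, 6),
    ("medium", 769, 5, 2),
    ("large", 1550, 10, 1),
]


def largest_model_that_fits(vram_gb):
    """Hypothetical helper: largest model whose approximate VRAM need fits, else None."""
    fitting = [m for m in WHISPER_MODELS if m[2] <= vram_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda m: m[1])[0]


print(largest_model_that_fits(8))    # medium
print(largest_model_that_fits(0.5))  # None
```

Picking by parameter count reflects the usual trade-off in the table: bigger models tend to be more accurate but are slower.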

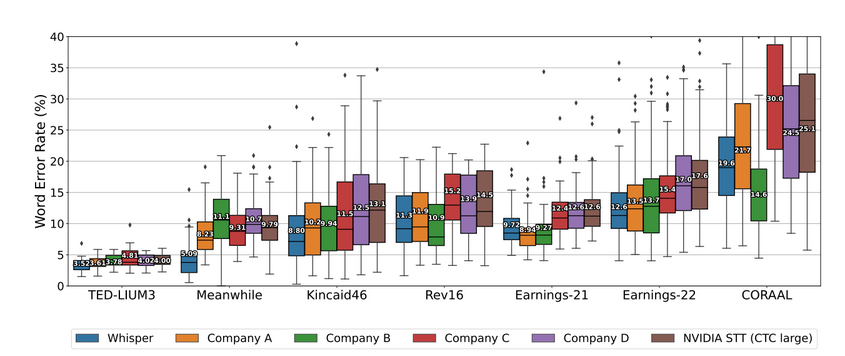

English Speech Recognition

Whisper is competitive with state-of-the-art commercial and open-source systems. Diagram from the Whisper research paper, p. 9.

Multi-lingual Speech recognition

- The Whisper model is trained on 99 languages.

- The OpenAI Whisper API supports only 57 languages, because performance in some of the other languages is not good enough.

Runs on almost any device

- Because Whisper was open-sourced, Georgi Gerganov could develop whisper.cpp, a port of OpenAI’s Whisper model to C/C++.

- It supports the following platforms:

  - macOS (Intel and ARM)

  - iOS

  - Android

  - Linux/FreeBSD

  - WebAssembly, etc.

Awesome community plugins

- Word-level timestamps with whisper-timestamped, WhisperX, etc.

- Fine-tuned Whisper models are achieving SOTA results in a lot of languages

- Speaker diarization

- Audio classification using OpenAI’s Whisper

- Up to 4x faster with the same accuracy using faster-whisper

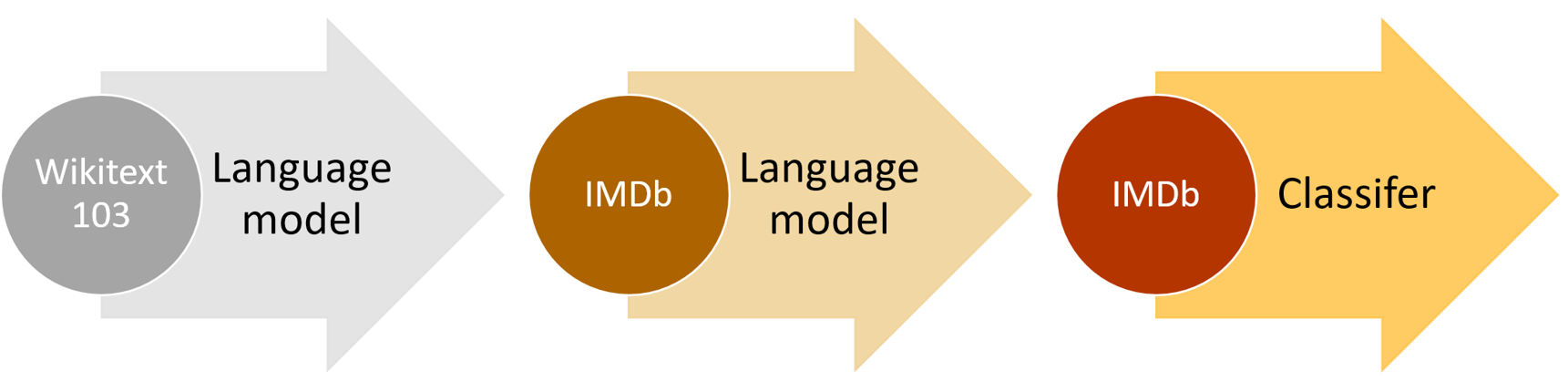

What is fine tuning?

Given a pre-trained model, which is a large model already trained on a broad task, fine-tuning means training it further on our own dataset so that the resulting model works very well for our specific task.

Picture from a fast.ai lesson covering the steps in fine-tuning a text classifier model

Fine-tuning is still relevant

What are the steps for fine-tuning Whisper?

- Prepare the environment

- Load the dataset

- Prepare the feature extractor, tokenizer and data

- Train and evaluate

- Build a demo (optional)
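To make the data preparation step a little more concrete: Whisper works on 16 kHz audio in 30-second windows, so each example is padded or truncated to that length before log-Mel features are computed. The sketch below shows only that padding/trimming step on a plain Python list; in practice Hugging Face's `WhisperFeatureExtractor` (or `whisper.pad_or_trim` in the openai-whisper package) handles it for you. The `pad_or_trim` function here is a hypothetical stand-in, not either library's code.

```python
SAMPLE_RATE = 16_000                      # Whisper expects 16 kHz mono audio
CHUNK_SECONDS = 30                        # Whisper processes 30-second windows
N_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS   # 480,000 samples per window


def pad_or_trim(samples, length=N_SAMPLES):
    """Zero-pad or truncate a list of audio samples to exactly `length` samples."""
    if len(samples) >= length:
        return samples[:length]
    return samples + [0.0] * (length - len(samples))


clip = [0.1] * (SAMPLE_RATE * 12)        # a fake 12-second clip
long_clip = [0.1] * (SAMPLE_RATE * 45)   # a fake 45-second clip
print(len(pad_or_trim(clip)), len(pad_or_trim(long_clip)))  # 480000 480000
```

Truncating a long training clip would leave its transcript describing audio the model never hears, so such clips are often filtered out of the training set instead.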

Whisper Event

- The Hugging Face team ran a two-week Whisper fine-tuning event from 5 December 2022 to 19 December 2022. The results were announced on 23 December 2022.

- The goal was to fine-tune the Whisper model to build state-of-the-art speech recognition systems in the languages of our choice 🗣

Malayalam models produced in Whisper Event

- For the language Malayalam, the results are as follows:

Malayalam models performance in whisper event according to leaderboard

Winning models in Malayalam in Whisper Event

- The winning model for Common Voice: thennal/whisper-medium-ml

- The winning model for FLEURS: parambharat/whisper-small-ml

Question Time

- Name three Malayalam fonts. (Hint: SMC makes a lot of fonts.)

- Who developed the user-friendly GNU/Linux distribution called Slynux while in high school? (Hint: He is an xMECian.)

I was not convinced

- I didn’t trust the Hugging Face way of evaluating models.

thennal/whisper-medium-ml model card readme

Last commit in thennal/whisper-medium-ml

Objective of my benchmarking

- To test whether a 10% WER was achievable on the available academic datasets.

Datasets

- Common Voice 11 Malayalam subset

- SMC Malayalam Speech Corpus

Metrics for evaluating ASR models

- ASR evaluation relies on comparing the ground-truth transcript with the ASR output.

- Common metrics for ASR evaluation that are popular and good enough are:

1. Word Error Rate (WER)

2. Character Error Rate (CER)
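Both metrics are edit distances normalized by the reference length: WER = (S + D + I) / N, where S, D and I are the word substitutions, deletions and insertions needed to turn the reference into the hypothesis, and N is the number of reference words; CER is the same calculation over characters. The sketch below is a minimal, dependency-free illustration, not the benchmarking project's code. Note that Python strings count Unicode code points, and a Malayalam syllable often spans several code points (for example a consonant plus a vowel sign), so the CER value depends on that choice; this version also counts spaces as characters.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (words or characters)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion of a reference item
                curr[j - 1] + 1,         # insertion of a hypothesis item
                prev[j - 1] + (r != h),  # substitution (free if they match)
            ))
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    return edit_distance(reference, hypothesis) / len(reference)


ref = "to be or not to be"
hyp = "to be or to bee"
print(round(wer(ref, hyp), 3))  # 0.333 (1 deletion + 1 substitution over 6 words)
print(round(cer(ref, hyp), 3))  # 0.278 (5 character edits over 18 characters)
```

Because insertions are counted, WER can exceed 100% when a model hallucinates extra words.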

I wanted to build something new

- New GitHub project for Malayalam ASR benchmarking

Time for a new adventure

Methodology for benchmarking

- Build it as a Python library so that more Whisper-based transformer models can be benchmarked in the future.

- Calculate WER, CER, model size and the time taken to benchmark each model on the listed datasets.

- Build a reproducible approach, so that benchmarking results are stored as datasets.
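A minimal sketch of such a benchmarking loop, assuming a `transcribe` callable that maps one audio item to text and a dataset of (audio, reference) pairs. Both, and the `benchmark` function itself, are hypothetical stand-ins rather than the project's actual API; model size is omitted because it depends on how the model is loaded. This version computes corpus-level scores (total edits divided by total reference words or characters), which weights long utterances more than averaging per-utterance scores would.

```python
import json
import time


def _edits(ref, hyp):
    """Levenshtein distance between two sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (r != h)))
        prev = curr
    return prev[-1]


def benchmark(model_name, transcribe, dataset):
    """Run `transcribe` over (audio, reference) pairs and return one result record."""
    word_edits = word_total = char_edits = char_total = 0
    start = time.perf_counter()
    for audio, reference in dataset:
        hypothesis = transcribe(audio)
        word_edits += _edits(reference.split(), hypothesis.split())
        word_total += len(reference.split())
        char_edits += _edits(reference, hypothesis)
        char_total += len(reference)
    elapsed = time.perf_counter() - start
    return {
        "model": model_name,
        "wer": 100 * word_edits / word_total,  # percent, corpus-level
        "cer": 100 * char_edits / char_total,  # percent, corpus-level
        "seconds": round(elapsed, 3),
    }


# Toy usage: a fake "model" that looks up a fixed transcript for each clip id.
fake_outputs = {"clip1": "to be or to bee", "clip2": "is a question"}
dataset = [("clip1", "to be or not to be"), ("clip2", "is a question")]
record = benchmark("toy-model", fake_outputs.get, dataset)
print(json.dumps(record))  # one JSON line per model run
```

Writing one JSON record per model and dataset is one simple way to keep results reproducible: the records can be appended to a file and published as a dataset alongside the code.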

Benchmarked models

- Started with 6 fine-tuned Malayalam models and compared them with 6 model versions released by OpenAI.

- thennal/whisper-medium-ml

- parambharat/whisper-tiny-ml

- parambharat/whisper-base-ml

- parambharat/whisper-small-ml

- anuragshas/whisper-large-v2-ml

- DrishtiSharma/whisper-large-v2-malayalam

Benchmarking results on the Common Voice dataset

Output from benchmarking tool

WER in Common Voice dataset

Word Error Rate in Common Voice-9 test split

CER in Common Voice dataset

Character Error Rate in Common Voice-9 test split

Benchmarking results on the Malayalam Speech Corpus dataset

Output from benchmarking tool

WER in Malayalam Speech Corpus

Word Error Rate in MSC

CER in Malayalam Speech Corpus

Character Error Rate in MSC

Links to Project

GitHub project

https://github.com/kurianbenoy/malayalam_asr_benchmarking

Benchmarking results

- Results on SMC Malayalam Speech corpus

https://huggingface.co/datasets/kurianbenoy/malayalam_msc_benchmarking/tree/main

- Results on Common Voice 11

https://huggingface.co/datasets/kurianbenoy/malayalam_common_voice_benchmarking

Future Ideas for Benchmarking

- A leaderboard similar to the Open LLM Leaderboard, with results for the latest Malayalam speech models.

- It should include results for Kaldi, Meta’s MMS, Wav2Vec, etc.

Open LLM leaderboard in huggingface spaces

Inspired by

- faster-whisper is a reimplementation of OpenAI’s Whisper model using CTranslate2, which is a fast inference engine for Transformer models.

- This implementation is up to 4 times faster than openai/whisper for the same accuracy while using less memory. The efficiency can be further improved with 8-bit quantization on both CPU and GPU.

CTranslate2

- An awesome library for optimizing ML models for production.

- CTranslate2 is a C++ and Python library for efficient inference with Transformer models.

- The project implements a custom runtime that applies many performance optimization techniques such as weights quantization, layers fusion, batch reordering, etc., to accelerate and reduce the memory usage of Transformer models on CPU and GPU.

CTranslate2 Whisper converter

- It provides a utility for converting any Whisper-based model into a faster-whisper-compatible model.

CTranslate2 Quantization formats

CTranslate2 supports various quantization formats like:

- float16

- int16

- int8

- int8_float16

- No quantization
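To show the basic idea behind int8 weight quantization, here is a minimal sketch of symmetric per-tensor quantization: each weight is stored as an 8-bit integer q in [-127, 127] together with one float scale, and recovered approximately as scale * q. This is a simplified illustration, not CTranslate2's implementation; real runtimes typically use finer-grained scales (for example one per output row) and optimized integer kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max(abs(w) for w in weights) / 127 or 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the int8 values back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
print(q)  # [42, -127, 0, 90]
restored = dequantize(q, scale)
# Rounding error per weight is at most half a quantization step (scale / 2).
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now takes 1 byte instead of 4 (float32) or 2 (float16), which is where the memory savings of the int8 formats come from; the cost is small rounding errors like the 0.003 weight collapsing to 0.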

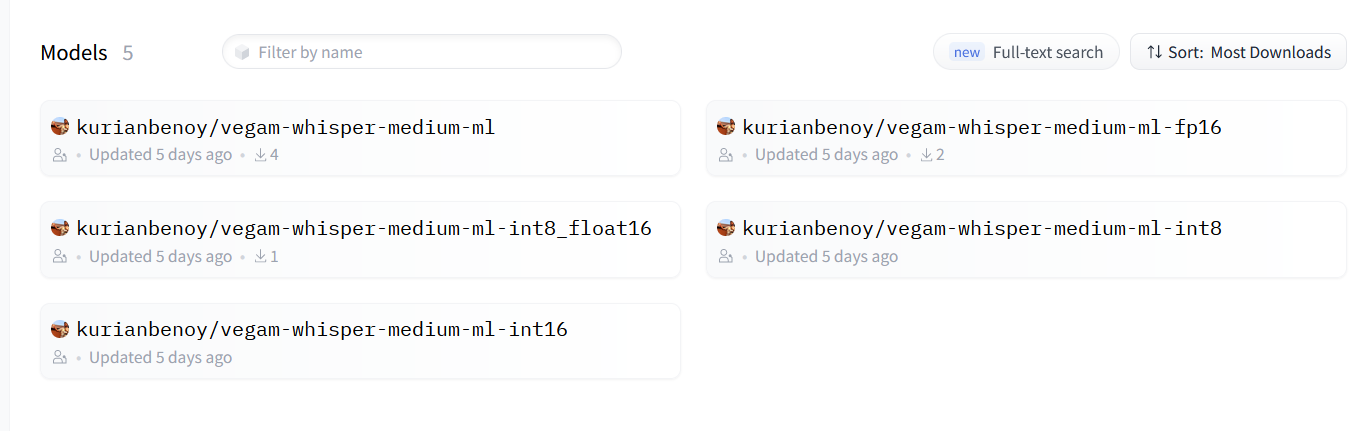

Vegam Whisper models released

- I converted thennal/whisper-medium-ml into faster-whisper-based models for Malayalam:

Vegam Whisper models hosted on Hugging Face

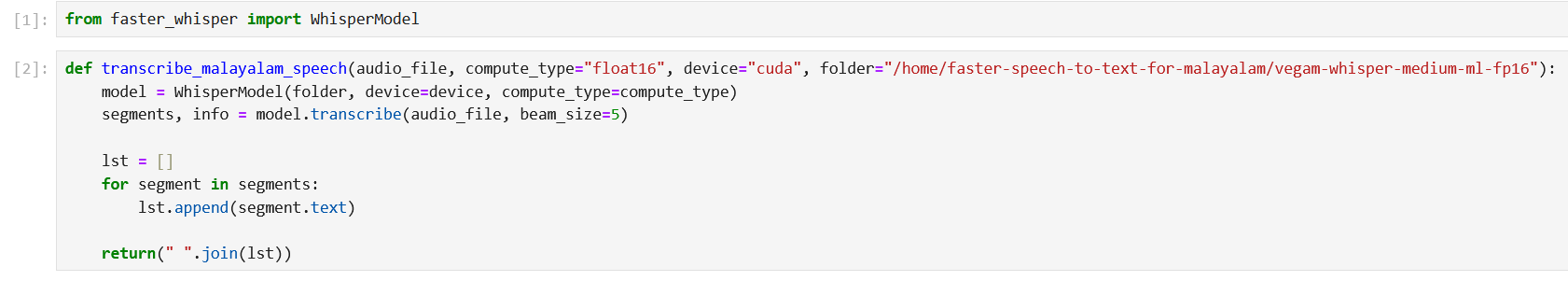

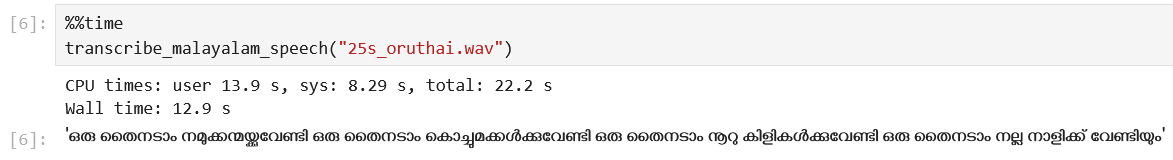

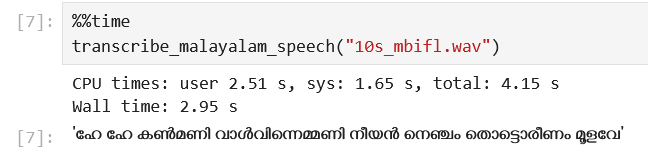

Code for faster-whisper

Source Code

Demo Video 1

Demo Video 1 Output

Output of clip from Video 1

Demo Video 2

Demo Video 2 Output

Output of clip from Video 2

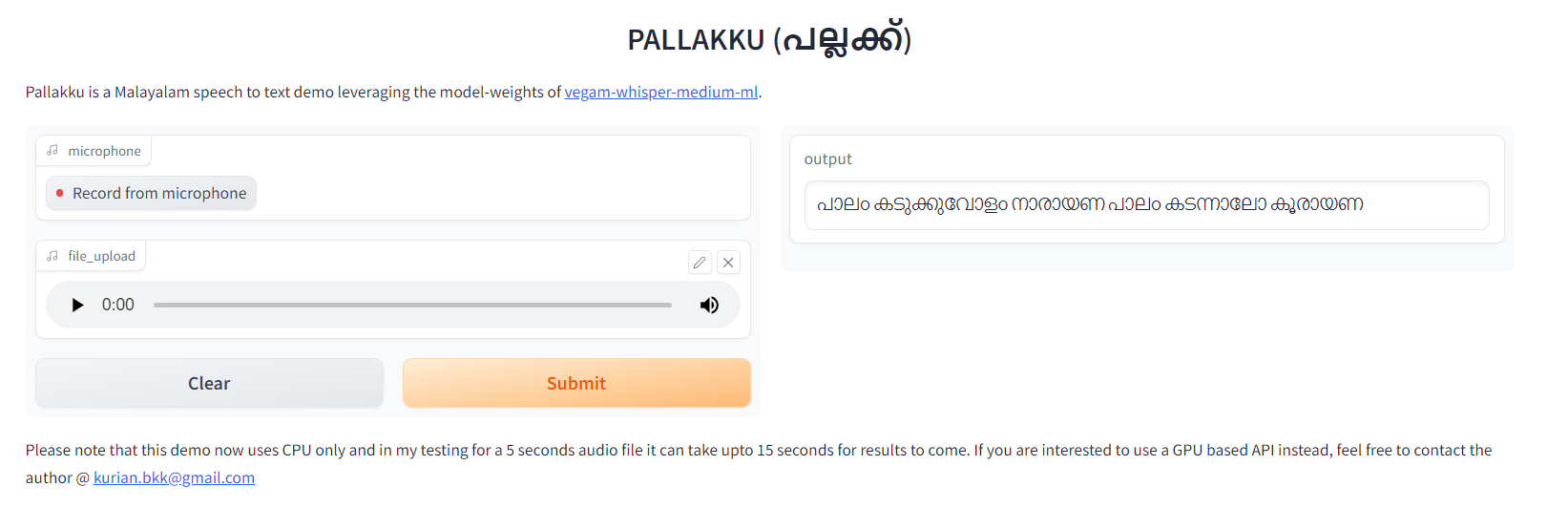

Pallakku

- Pallakku is a Malayalam speech-to-text demo built on the model weights of vegam-whisper-medium-ml.

- Two options to try it out:

  - 🤗 Spaces

  - GPU-based microservice (coming soon)

🤗 spaces

- Try it out at:

https://huggingface.co/spaces/kurianbenoy/Pallakku

Trying GPT in Malayalam

Asking Questions in Malayalam to GPT-4

Question Time

- Which was the best-performing Malayalam ASR model according to the malayalam_asr_benchmarking results?

- What are the code names of Debian versions 11 and 12?

Conclusion

- For Malayalam, fine-tuned Whisper models have achieved phenomenal results.

- The best model after benchmarking is: thennal/whisper-medium-ml

- I think there seems to be a good ASR model suitable for production use cases.

- You can do the same in your own language, especially if it is a low-resource language.

Thanks to

- OpenAI team: Alec Radford, Jong Wook Kim, Christine McLeavey and the other authors of the Whisper paper

- Creator of CTranslate2 and faster-whisper: Guillaume Klein

- Hugging Face team: Sanchit Gandhi, Nicolas Patry, Vaibhav Srivastav, etc.

- Kavya Manohar

- Santhosh Thottingal

- Thennal D K

- AbdulMajedRaja RS

- Georgi Gerganov

- Ramsri Goutham

- Wayde Gilliam

- Other members in SMC.

- Jarvis Labs

Contact me

Kurian Benoy || 17th June, 2023 || AI with Malayalam Computing!