Vegam Whisper Family of Models and demoing Malayalam Speech to Text

Summit 2023 @ Indian Institute of Information Technology, Kottayam (IIIT-K)

Saturday, June 10, 2023

What really matters!

Inspired by

- faster-whisper is a reimplementation of OpenAI’s Whisper model using CTranslate2, which is a fast inference engine for Transformer models.

- This implementation is up to 4 times faster than openai/whisper for the same accuracy while using less memory. The efficiency can be further improved with 8-bit quantization on both CPU and GPU.

CTranslate2

- An awesome library for optimizing ML models for production.

- CTranslate2 is a C++ and Python library for efficient inference with Transformer models.

- The project implements a custom runtime that applies many performance optimization techniques, such as weight quantization, layer fusion, and batch reordering, to accelerate Transformer models and reduce their memory usage on CPU and GPU.

CTranslate2 Whisper converter

- CTranslate2 ships a converter utility that turns any Whisper-based model into a faster-whisper compatible model.
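As a rough illustration of that conversion step, the sketch below wraps CTranslate2's `TransformersConverter` Python class. The function name `convert_whisper`, the small `SUPPORTED_QUANTIZATIONS` set, and the output directory in the usage comment are assumptions made for this example, not part of the talk.

```python
# Quantization names accepted by this sketch (a subset chosen for illustration).
SUPPORTED_QUANTIZATIONS = {"float32", "float16", "int16", "int8", "int8_float16"}


def convert_whisper(model_name, output_dir, quantization="float16"):
    """Convert a Hugging Face Whisper checkpoint to the CTranslate2 format."""
    if quantization not in SUPPORTED_QUANTIZATIONS:
        raise ValueError(f"unsupported quantization: {quantization!r}")

    # Imported lazily so the validation above works without ctranslate2 installed.
    from ctranslate2.converters import TransformersConverter

    converter = TransformersConverter(model_name)
    # force=True overwrites output_dir if it already exists.
    return converter.convert(output_dir, quantization=quantization, force=True)


# Example call (downloads the checkpoint, so it needs network access and the
# ctranslate2 and transformers packages; the output path is hypothetical):
# convert_whisper("thennal/whisper-medium-ml", "vegam-whisper-medium-ml-fp16")
```

Rejecting an unknown quantization name before the conversion starts avoids downloading a large checkpoint only to fail at the final step.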

CTranslate2 Quantization formats

CTranslate2 supports various quantization formats like:

- float16

- int16

- int8

- int8_float16

- No quantization

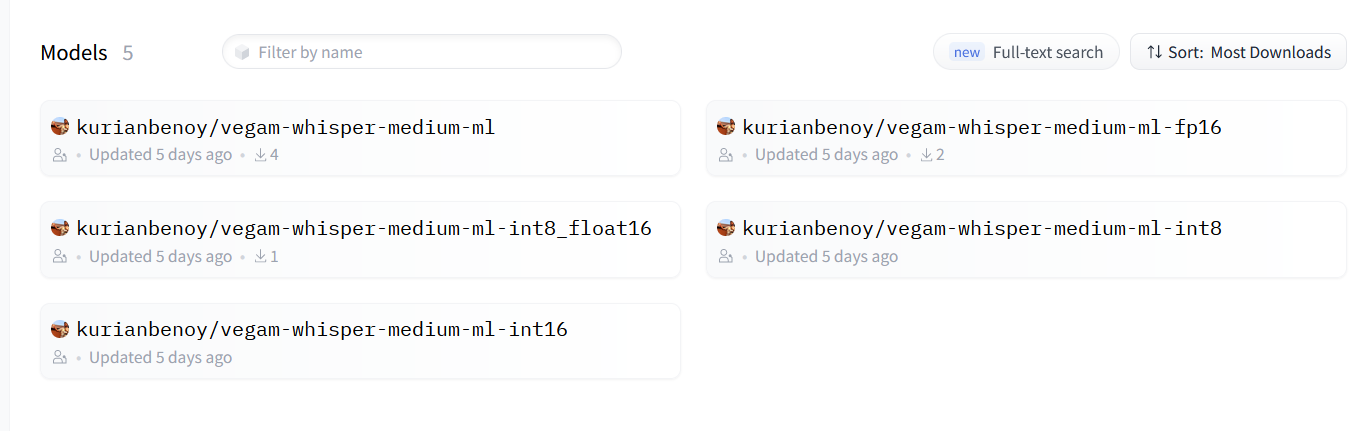
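To give a feel for what 8-bit weight quantization does, here is a simplified pure-Python sketch of symmetric int8 quantization on a single row of weights. It illustrates the general idea only and is not CTranslate2's actual implementation; the function names and sample values are made up for this example.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization of one row of float weights."""
    max_abs = max(abs(w) for w in weights)
    # An all-zero row needs no scaling; avoid dividing by zero.
    scale = 127.0 / max_abs if max_abs > 0 else 1.0
    # Scale, round to the nearest integer, and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w * scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Map int8 values back to approximate floats."""
    return [q / scale for q in quantized]


row = [0.4, -1.0, 0.25, 0.0]
q, scale = quantize_int8(row)
restored = dequantize_int8(q, scale)
print(q)         # [51, -127, 32, 0]
print(restored)  # close to the original row, with a small rounding error
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half a quantization step per value.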

Vegam Whisper models released

- I converted thennal/whisper-medium-ml to faster-whisper based models for Malayalam:

Vegam Whisper models hosted on Hugging Face

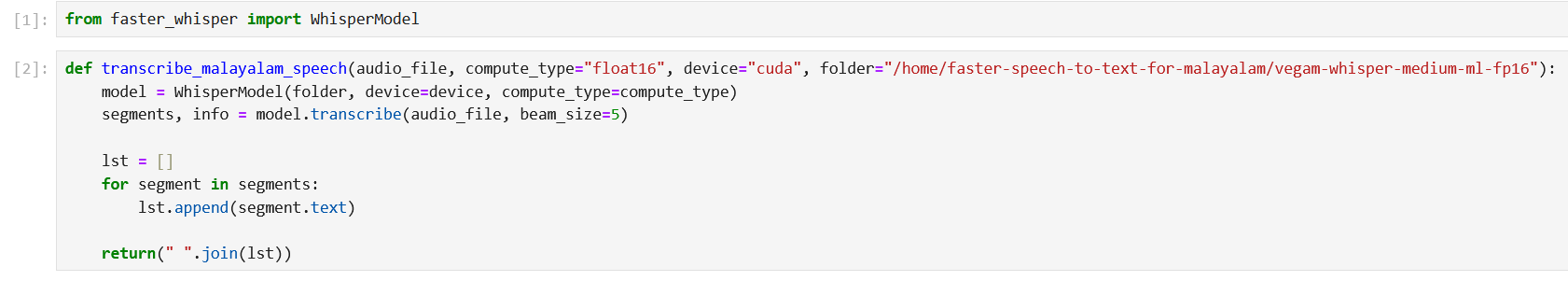

Code for faster-whisper

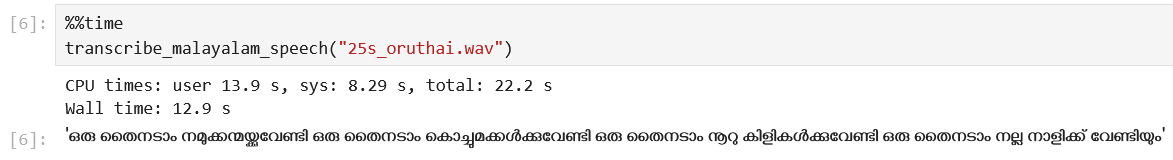
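The slide shows the code as a screenshot. A minimal sketch of typical faster-whisper usage might look like the following; it is not a transcription of that image. The helper names `format_segment` and `transcribe` are hypothetical, and `model_path` is assumed to point at a local directory holding converted weights such as vegam-whisper-medium-ml.

```python
def format_segment(start, end, text):
    """Render one transcribed segment with its timestamps in seconds."""
    return f"[{start:.2f}s -> {end:.2f}s] {text.strip()}"


def transcribe(model_path, audio_path, device="cpu", compute_type="int8"):
    """Transcribe an audio file and return formatted segment lines."""
    # Imported lazily so the formatting helper works without faster-whisper.
    from faster_whisper import WhisperModel

    model = WhisperModel(model_path, device=device, compute_type=compute_type)
    # transcribe() returns a lazy generator of segments plus metadata;
    # decoding only runs while the generator is consumed.
    segments, info = model.transcribe(audio_path, beam_size=5, language="ml")
    return [format_segment(s.start, s.end, s.text) for s in segments]


# Example call (both paths are hypothetical):
# for line in transcribe("vegam-whisper-medium-ml", "song_clip.mp3"):
#     print(line)
```

Passing `language="ml"` skips automatic language detection, which seems a reasonable choice when the audio is known to be Malayalam.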

Demo Video 1

Oru Thai Nadam, sung by Venugopal and Sreya, lyrics by Sugathakumari

Demo Video 1 Output

Output of clip from Video 1

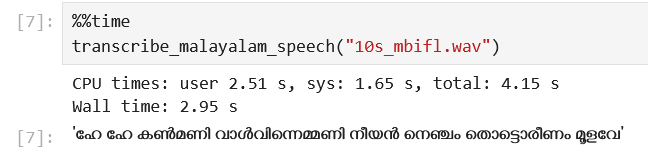

Demo Video 2

Sung by Sithara Krishna Kumar, lyrics by BK Hari Narayanan. This song was created spontaneously at MBIFL 2023.

Demo Video 2 Output

Output of clip from Video 2

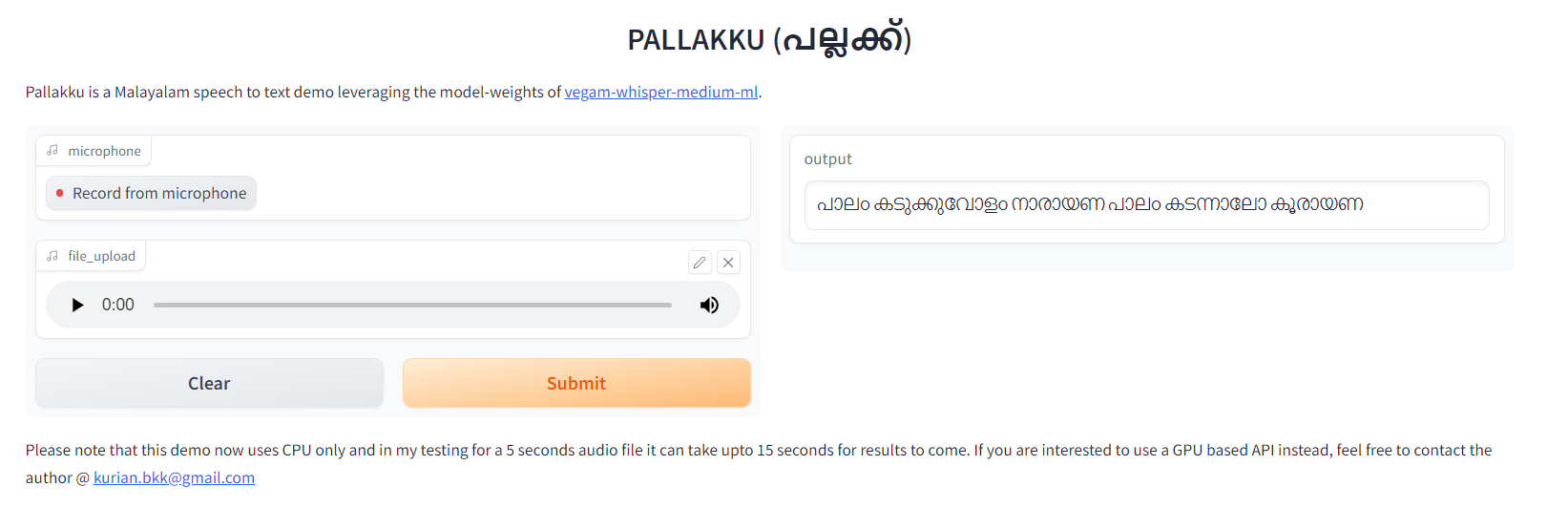

Pallakku

- Pallakku is a Malayalam speech-to-text demo that uses the model weights of vegam-whisper-medium-ml.

- Two options to try it out:

  - 🤗 Spaces

  - GPU-based microservice (coming soon)

🤗 Spaces

- Try it out at: https://huggingface.co/spaces/kurianbenoy/Pallakku

Kurian Benoy || Vegam Whisper Family of Models and demoing Malayalam Speech to Text